Case: Two Scenarios

Client:

Saving a tech demo in 72 hours

Disclaimer: The data used in this case study is based on publicly available sources, and details of the scenario have been adjusted for illustrative purposes.Technologies used

Python, MySQL, Docker

Background

The client, Two Scenarios, needed to import historical pharmaceutical pricing data into their own database to power a Tableau dashboard and

Their original tech demo, showcasing the pricing dashboard, had to be postponed due to delays.

We were brought in the following Monday to help complete the project and ensure everything was ready in time for the rescheduled demo by the end of the week. Along the way, we also introduced several optimisations to the codebase to improve maintainability and performance.

Challenges

We identified the following challenges within the codebase as received:

The data came from a legacy system and was incompatible with modern Python libraries. Importing the pricing data would result in corrupted characters and missing data. The previous developer had attempted to solve the problem but was unsuccessful.

The original database did not distinguish between the frequently changing fields such as prices, and static fields such as product names. This caused significant data duplication, leading to performance problems in Tableau and significantly higher storage costs.

Solution

All software needs a strong and stable foundation. We therefore started by addressing the architectural shortcomings, rewriting tightly coupled logic so that each component could function independently. This made future development faster, easier, and made major optimisations possible. It was now also possible to implement several automated tests to ensure reliability and consistency.

Following some detective work, we identified the original encoding and built a custom converter allowing us to migrate and read the data uncorrupted. This data was then stored in a modern format (UTF-8).

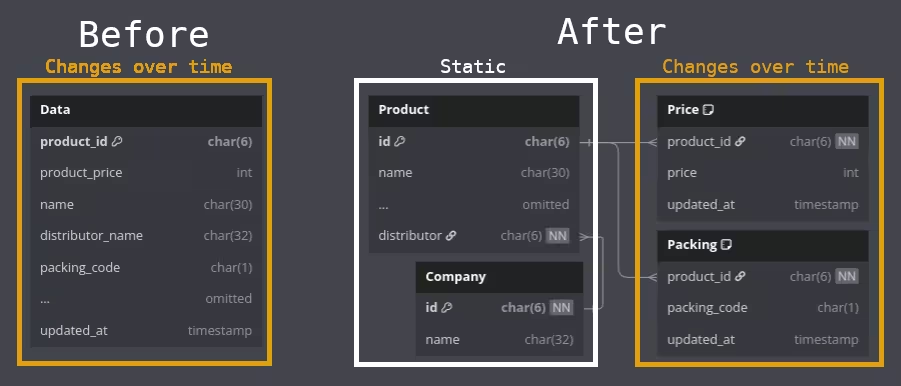

Together with the client, we scoped what data was relevant for the dashboard. We also identified which fields were static and which were dynamic. This revealed that only the pricing and "packing code" changed over time. This also revealed a potential bug in the original dashboard: if a company changed its name, it was treated as a different entity, leading to data anomalies. We then designed a new normalised database schema as shown below:

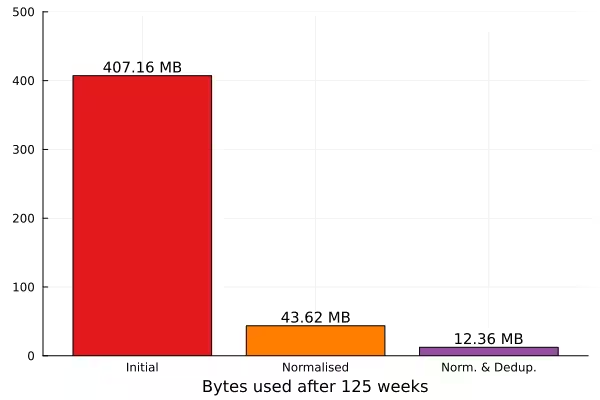

This change removed the potential of data anomalies and reduced the database size by an order of magnitude. These savings also directly correlated to the amount of time it took to load the data into Tableau.

Another insight was that every new data file of data from the source contained a full snapshot of all prices and codes, even if a product didn't change since the last snapshot. This meant that we could get even greater savings by discarding unchanged entries between snapshots. Combining the normalised database schema and this deduplication scheme, we further reduced the overall data size by 33x.

To ensure a successful tech demo, we made some final modifications to how data was being imported. Namely, we made the system incrementally fetch the data from from most recent and back in small batches. This meant that the client was able to begin preparing their demo before the full import was complete as well as preventing the potential data loss that would occur if the import was stopped halfway.

Everything but the deduplication scheme was completed within the first 48 hours of the project.

Outcome

The project remained within budget and with enough time left for the client to implement the tech demo and meet their deadline. Beyond immediately making it possible to finish the tech demo in time, the codebase was refactored to be significantly more maintainable and versatile. The more efficient schema also enabled the client to process more data without overloading Tableau or having to sacrifice data quality.